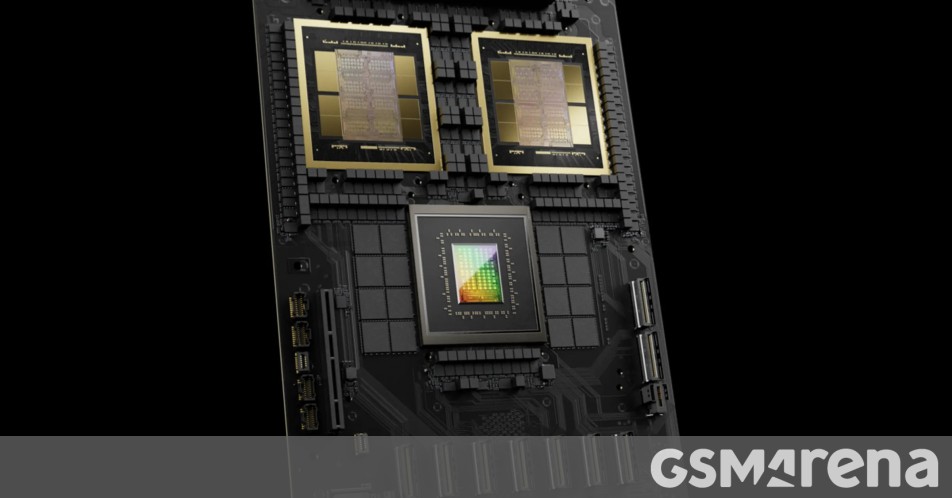

During its GPU Technology Conference (GTC), Nvidia unveiled what it calls the world's most powerful chip for AI computing: the GB200, built around the new Blackwell B200 GPU. It succeeds the H100 AI chip and brings major gains in performance and efficiency.

The new B200 GPU delivers 20 petaflops of FP4 compute, thanks to the 208 billion transistors packed into the chip. In addition, the GB200 offers 30 times the performance of the H100 in LLM inference workloads while cutting energy consumption 25-fold. In the GPT-3 LLM benchmark, the GB200 is also seven times faster than the H100.

For example, training a model with 1.8 trillion parameters would require 8,000 Hopper GPUs and about 15 megawatts, whereas 2,000 Blackwell GPUs can do it using just 4 megawatts.
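To put the training example in perspective, here is a quick back-of-the-envelope calculation using only the figures quoted above. It is a rough sketch: the power numbers are system-level totals (and the Hopper figure is approximate), so dividing by GPU count gives an average facility draw per GPU, not any chip's rated power. The helper name `kw_per_gpu` is purely illustrative.

```python
# Figures quoted in the article for training a 1.8-trillion-parameter model.
HOPPER_GPUS, HOPPER_MW = 8_000, 15
BLACKWELL_GPUS, BLACKWELL_MW = 2_000, 4


def kw_per_gpu(total_mw: float, gpus: int) -> float:
    """Average system power per GPU in kilowatts (total power / GPU count)."""
    return total_mw * 1_000 / gpus


gpu_reduction = HOPPER_GPUS / BLACKWELL_GPUS  # how many times fewer GPUs
power_reduction = HOPPER_MW / BLACKWELL_MW    # how many times less power

print(f"GPUs: {gpu_reduction:.2f}x fewer")
print(f"Power: {power_reduction:.2f}x lower")
print(f"Hopper: {kw_per_gpu(HOPPER_MW, HOPPER_GPUS):.3f} kW per GPU")
print(f"Blackwell: {kw_per_gpu(BLACKWELL_MW, BLACKWELL_GPUS):.3f} kW per GPU")
```

On these rounded figures, the job needs 4 times fewer GPUs and 3.75 times less power, while the average draw per GPU is roughly the same (about 1.9 kW versus 2.0 kW). The savings come from needing far fewer GPUs, not from each one drawing less.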

To further improve efficiency, Nvidia designed a new network switch chip with 50 billion transistors that can handle 576 GPUs and let them communicate with one another at 1.8 TB/s of bidirectional bandwidth.

This addresses a communication bottleneck: previously, a system combining 16 GPUs would spend 60% of its time communicating and only 40% computing.
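Why does faster communication matter so much? A simple model helps. The sketch below assumes computing and communicating happen back to back with no overlap, and that only the communication phase gets faster. The speedup factors are illustrative assumptions, not Nvidia figures, and `compute_fraction` is a hypothetical helper.

```python
def compute_fraction(compute: float, comm: float, comm_speedup: float = 1.0) -> float:
    """Share of wall-clock time spent computing.

    Assumes compute and communication run back to back (no overlap), and that
    only the communication phase is sped up by `comm_speedup`.
    """
    if comm_speedup <= 0:
        raise ValueError("comm_speedup must be positive")
    return compute / (compute + comm / comm_speedup)


# Baseline from the article: 40% computing, 60% communicating.
for speedup in (1, 2, 6):  # illustrative speedups, not Nvidia figures
    print(f"comm {speedup}x faster -> {compute_fraction(0.4, 0.6, speedup):.0%} computing")
```

Under this model, doubling communication speed lifts the computing share from 40% to about 57%, and a sixfold speedup lifts it to 80%. Real systems often overlap the two phases, so treat this as an upper-level intuition rather than a prediction.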

Nvidia says it is offering companies a complete solution. For example, the GB200 NVL72 fits 36 CPUs and 72 GPUs into a single liquid-cooled rack. A DGX SuperPOD for DGX GB200, on the other hand, combines eight of these systems into one, for a total of 288 CPUs and 576 GPUs with 240 TB of memory.
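The SuperPOD totals follow directly from the per-rack numbers. Here is a minimal sketch of that arithmetic; the `System` type and `combine` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    """CPU and GPU counts for one rack-scale system."""
    cpus: int
    gpus: int


# GB200 NVL72 figures from the article: 36 CPUs and 72 GPUs per rack.
NVL72 = System(cpus=36, gpus=72)


def combine(unit: System, count: int) -> System:
    """Totals for `count` identical systems combined into one."""
    return System(cpus=unit.cpus * count, gpus=unit.gpus * count)


superpod = combine(NVL72, 8)
print(superpod)                        # System(cpus=288, gpus=576)
print(superpod.gpus // superpod.cpus)  # 2 GPUs per CPU
```

Eight NVL72 racks give 288 CPUs and 576 GPUs, keeping the two-GPUs-per-CPU ratio of a single rack. The 576-GPU total also matches the number of GPUs the new switch chip is said to handle.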

Companies such as Oracle, Amazon, Google and Microsoft have already announced plans to integrate the NVL72 racks into their cloud services.

The GPU architecture behind the Blackwell B200 is expected to be the foundation of the upcoming RTX 5000 series.
